AI Agents Can Learn New Physical Skills On Their Own

2stepz_ahead

http://www.tomshardware.com/news/openai-competitive-self-play-training-strategy,35671.html

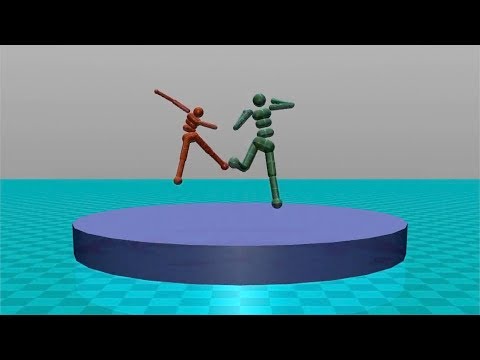

OpenAI, an AI research nonprofit co-founded by Elon Musk and others, has been experimenting with giving AI agents goals in a set of basic games and then letting them play against each other to win. The team found that this led the agents to learn physical skills such as tackling, ducking, faking, kicking, catching, and diving for the ball, all on their own.

OpenAI calls this type of training "competitive self-play." The nonprofit set up various competitions between simulated 3D robots and gave them goals such as pushing an opponent out of a sumo ring; reaching the other side of the ring while preventing the opponent from doing the same; and kicking a ball into a net while preventing the opponent from scoring.

The agents initially receive dense rewards for simple acts such as standing or moving forward. These rewards are gradually reduced to zero in favor of a reward based only on winning or losing the game being played. Each agent's neural network policy is trained independently.
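The shift from dense to sparse rewards can be pictured as a blend whose weight moves from the dense term to the win/loss term over training. The minimal sketch below assumes a linear schedule and a hypothetical `anneal_steps` parameter; the article does not say what schedule OpenAI used, so treat both as illustrative.

```python
def annealing_coefficient(step: int, anneal_steps: int) -> float:
    """Weight on the dense reward: 1.0 at step 0, falling linearly to 0.0 at anneal_steps.

    The linear shape and anneal_steps are assumptions made for illustration.
    """
    if anneal_steps <= 0:
        return 0.0
    return max(0.0, 1.0 - step / anneal_steps)


def shaped_reward(dense: float, sparse: float, step: int, anneal_steps: int) -> float:
    """Blend a dense 'stand up / move forward' reward with the sparse win/loss reward."""
    alpha = annealing_coefficient(step, anneal_steps)
    return alpha * dense + (1.0 - alpha) * sparse


if __name__ == "__main__":
    # Hypothetical values: dense reward 0.5 per step, sparse reward +1 for a win.
    for step in (0, 500, 1000, 2000):
        print(step, shaped_reward(dense=0.5, sparse=1.0, step=step, anneal_steps=1000))
```

Once `step` passes `anneal_steps`, only the outcome of the game contributes, which matches the "rewarded only for winning or losing" phase described above.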

In the sumo fighting game, the agents were first rewarded for exploring the ring, but eventually they received a reward only for pushing the opponent out of the ring. In a simple game like this one, a virtual agent could also be explicitly programmed to behave this way, but the code would be far more complex, and its success would depend largely on the designer getting every detail right.
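To make the final, sparse sumo objective concrete, here is a minimal sketch of an outcome check. It assumes a circular ring centred at the origin, a hypothetical `RING_RADIUS`, and agent positions given as 2D `(x, y)` torso coordinates; none of these details come from the article.

```python
import math
from typing import Tuple

Position = Tuple[float, float]

RING_RADIUS = 1.5  # hypothetical ring radius in simulation units


def is_outside_ring(pos: Position, radius: float = RING_RADIUS) -> bool:
    """True if an (x, y) torso position lies beyond the ring centred at the origin."""
    return math.hypot(pos[0], pos[1]) > radius


def sumo_outcome_reward(me: Position, opponent: Position, radius: float = RING_RADIUS) -> float:
    """Sparse reward: +1 for pushing the opponent out, -1 for being pushed out, 0 otherwise."""
    me_out = is_outside_ring(me, radius)
    opp_out = is_outside_ring(opponent, radius)
    if opp_out and not me_out:
        return 1.0
    if me_out and not opp_out:
        return -1.0
    return 0.0  # both inside (game continues) or both out (treated here as a draw)
```

Note that nothing here tells the agent *how* to push; with only this signal, the pushing, bracing, and dodging behaviour has to be discovered through play.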

With self-play, however, the agents can work out largely on their own what they need to do to achieve their objective, after thousands of games against progressively improved versions of themselves. OpenAI used a similar strategy to train the Dota 2 AI it unveiled earlier this year, which went on to beat top professional players in one-on-one matches.

Transfer Learning

The AI agents can not only master a given game or environment but also transfer the knowledge and skills they gain in one game to another. To test this, the OpenAI team exposed two agents, neither of which had experienced wind before, to simulated "wind" forces. One had been trained to walk using classical reinforcement learning; the other had been trained via self-play in the sumo fighting game.

The first agent was knocked over by the wind, while the second, drawing on the skills it had learned pushing against an opponent, resisted the wind and stayed on its feet. This suggests that an agent can transfer skills learned in one setting to similar tasks and environments.

At first, the agents overfit: they co-learned policies precisely tailored to one specific opponent and failed when facing an opponent with different characteristics. The OpenAI team solved this by pitting the agents against a variety of opponents, in this case policies that were trained in parallel or policies saved from earlier in the training process.
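One way to picture "different opponents" is a pool of stored policies from which each training match draws an opponent. The sketch below is illustrative only: the `OpponentPool` class, its `min_fraction` parameter, and uniform sampling are assumptions, not OpenAI's released code. Strings stand in for real policy networks.

```python
import random
from typing import Generic, List, Optional, TypeVar

PolicyT = TypeVar("PolicyT")


class OpponentPool(Generic[PolicyT]):
    """Hypothetical pool of opponents: earlier snapshots and/or policies trained in parallel."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._policies: List[PolicyT] = []
        self._rng = random.Random(seed)

    def add(self, policy: PolicyT) -> None:
        """Store a policy, e.g. a snapshot saved every N training iterations."""
        self._policies.append(policy)

    def sample(self, min_fraction: float = 0.0) -> PolicyT:
        """Pick an opponent uniformly, ignoring the oldest `min_fraction` of stored history.

        min_fraction=0.0 draws from the whole history; 1.0 always returns the newest policy.
        """
        if not self._policies:
            raise ValueError("opponent pool is empty")
        if not 0.0 <= min_fraction <= 1.0:
            raise ValueError("min_fraction must be between 0 and 1")
        start = int(min_fraction * (len(self._policies) - 1))
        return self._rng.choice(self._policies[start:])


if __name__ == "__main__":
    pool: OpponentPool[str] = OpponentPool(seed=0)
    for version in range(10):
        pool.add(f"policy_v{version}")  # placeholder names for saved policies
    print(pool.sample(min_fraction=0.5))  # one of policy_v4 through policy_v9
```

Always training against only the newest opponent corresponds to `min_fraction=1.0`, the setting most prone to the overfitting described above; lower values mix in older, differently behaving opponents.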

OpenAI is now increasingly confident that self-play will be a core part of powerful AI systems in the future. The group released the MuJoCo environments and trained policies used in this project so that others can run their own experiments. OpenAI also announced that it is currently hiring researchers interested in working on self-play systems.

https://www.youtube.com/watch?v=OBcjhp4KSgQ&feature=youtu.be

Comments

Something about dude's "visions" annoys me.

Seems like the kind of dude to fast-forward the "end of the world" type stuff while a wealthy MF like him makes plans for the post-apocalypse.